Game-Changer: New AI Stock Emerges with Chips Claiming 20x Nvidia’s Speed

Silicon Shakeup: Startup Claims Processing Revolution

While Wall Street analysts scramble to update their price targets (because clearly they saw this coming all along), a new contender, Cerebras, enters the AI hardware arena with bold performance claims. Its wafer-scale architecture reportedly sidesteps the chip-to-chip communication bottlenecks that limit conventional GPU clusters.

Raw Speed Meets Market Reality

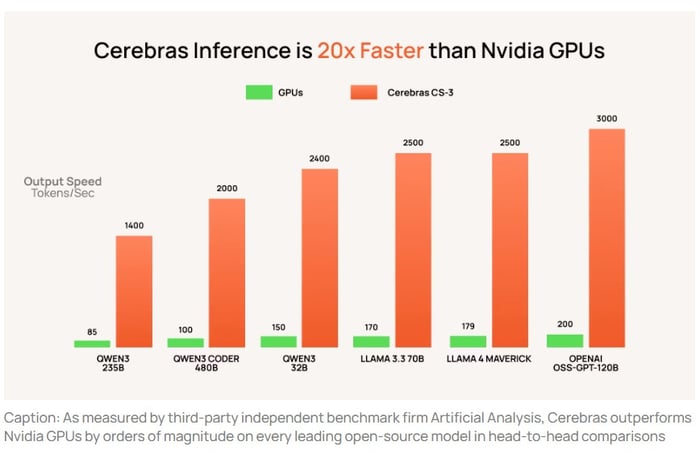

Twenty times faster than industry leader Nvidia? That's the ambitious figure being thrown around. According to the company, its technology cuts through the communication delays that typically slow machine learning workloads, potentially reshaping how AI models get trained and deployed.

Investors are already circling—some genuinely excited about the technology, others just relieved to have something new to hype after missing the early crypto boom. The real test comes when these chips face actual AI workloads outside controlled lab environments.

Market Impact and Industry Response

If these performance claims hold up under real-world scrutiny, we're looking at more than just another tech stock: we could be witnessing a paradigm shift in computational infrastructure. Nvidia's dominance would face one of its most credible challenges yet, though converting technical superiority into market share remains the ultimate hurdle.

Remember when everyone thought blockchain would revolutionize everything? This actually might.

Cerebras' wafer-scale chip explained: One giant engine for AI

To understand why Cerebras is generating so much buzz, investors need to look at how it's breaking the rules of traditional chip design.

Nvidia's GPUs are comparatively small but powerful processors that must be clustered -- sometimes in the tens of thousands -- to perform the enormous calculations required to train modern AI models. These clusters deliver incredible performance, but they also introduce inefficiencies. Each chip must constantly pass data to its neighbors through high-speed networking equipment, which creates communication delays, drives up energy costs, and adds technical complexity.

Cerebras turned this model upside down. Instead of linking thousands of smaller chips, it builds a single, massive processor the size of an entire silicon wafer, aptly named the Wafer Scale Engine. Within this one piece of silicon sit hundreds of thousands of cores that work together on a unified architecture. Because those cores communicate on the wafer itself, data no longer needs to bounce between separate chips, which Cerebras says dramatically boosts speed while cutting power consumption.

Image source: Getty Images.

Why Cerebras thinks it can outrun Nvidia

Cerebras' big idea is efficiency. By minimizing inter-chip communication, its wafer-scale processor aims to keep an AI model's work on a single chip, cutting out wasted time and power.

That's where Cerebras' claim of 20x faster performance originates. The advantage isn't about raw clock speed; it comes from streamlining how data moves and removing communication bottlenecks.

Data source: Cerebras.

The practical advantage of this architecture is simplicity. Instead of managing, cooling, and synchronizing a large GPU cluster, a customer can deploy a Cerebras system that fits in a single rack and is ready for deployment, which the company says translates into dramatic savings on AI infrastructure costs.

Why Nvidia still reigns

Despite the hype, Cerebras still carries risk. Manufacturing a chip this large is an engineering puzzle. Yield rates can fluctuate, and even a minor defect anywhere on the wafer can compromise a significant portion of the processor. This makes scaling a wafer-scale design both costly and uncertain.

Nvidia remains the undisputed leader in AI computing. Beyond its powerful hardware, Nvidia's CUDA software platform has created a deeply entrenched ecosystem on which virtually every major hyperscaler builds its generative AI applications. Overcoming this kind of competitive moat requires more than cutting-edge hardware. It demands a complete shift in how businesses design and deploy AI, and customers must weigh the operational burden and switching costs that come with it.

That said, the total addressable market (TAM) for AI chips is expanding rapidly, leaving room for new architectures to coexist alongside incumbents like Nvidia. For instance, Alphabet's tensor processing units (TPUs) are tailored for deep learning tasks, whereas Nvidia's GPUs serve as versatile, general-purpose workhorses. This dynamic suggests that Cerebras could carve out its own niche in the AI chip market without needing to dethrone Nvidia entirely.

How to invest in Cerebras stock

Cerebras previously explored an initial public offering (IPO) and even published a draft S-1 filing late last year. However, following a recent $1.1 billion funding round, the company appears to have put its IPO plans on hold. For now, investing in Cerebras is largely limited to accredited investors, venture capital (VC) firms, and private equity funds.

For everyday investors, the more practical approach is to stick with established chip leaders such as Nvidia, or with ancillary partners across the AI hardware supply chain, all of which are poised to benefit from the explosive growth of AI infrastructure spending.